
What happens to a Timer Intermediate Catching Event inside a subprocess if the Activiti engine dies?


03-21-2018 12:22 AM

When the engine is restarted, does it try to run the timer task even though the subprocess that contains it died during the last execution?

Labels: Alfresco Process Services


03-21-2018 10:37 AM

Could you explain a bit about your process and why you're asking the question? Any diagram you can include might help. I think the answer is that a timer task will be created when the transaction is committed on entering the subprocess and so it would depend on whether the engine 'dies' before that transaction is committed. I'm not sure what kind of death scenario you have in mind or why you're focused on it so I'm not sure how precise of an answer you're looking for.


03-21-2018 08:10 PM

Hi Ryan,

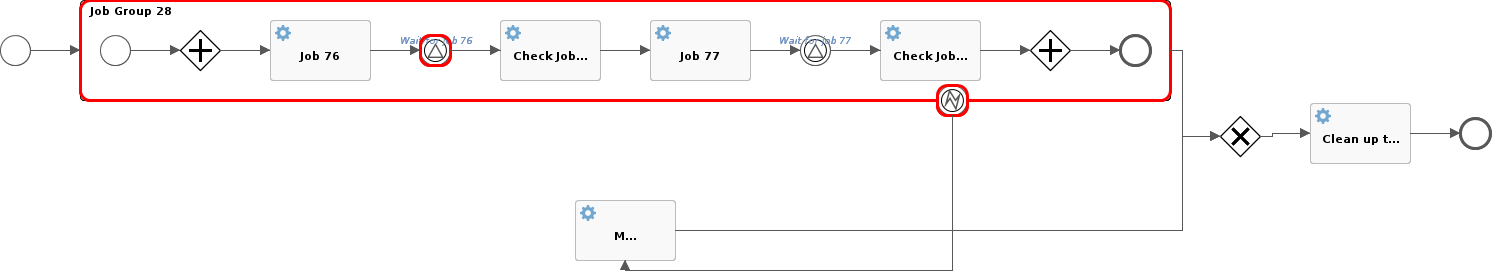

Thank you for replying. I am sorry I did not give you context about the my requirements. I am running activiti with Spring. ( With asyncJobExecutor). Right now We have following model :

The requirements are as follows:

I want to run a parallel task alongside Job 76 (and likewise for Job 77) that reports the status or progress of Job 76 and finishes as soon as Job 76 finishes. If the process instance dies, the task should not be picked up by any other process engine sharing the same database, nor by the same Spring application when it restarts.

I came up with the following logic, using an event-based gateway that relies on a signal (taken from the architecture above). It is a job-complete signal that is thrown whenever Job 76 completes.
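The diagram for this design is not reproduced in the thread. As a rough sketch only, an event-based gateway that waits for either a job-complete signal or a timer could be modelled along these lines. Every id, the signal name `jobComplete`, and the 30-second duration are hypothetical placeholders, not values from the original model.

```xml
<!-- Declared at the <definitions> level (hypothetical signal name) -->
<signal id="jobCompleteSignal" name="jobComplete"/>

<!-- Inside the <process> element -->
<eventBasedGateway id="waitForJobOrTimer"/>
<sequenceFlow id="toSignal" sourceRef="waitForJobOrTimer" targetRef="jobCompleteCatch"/>
<sequenceFlow id="toTimer" sourceRef="waitForJobOrTimer" targetRef="pollTimer"/>

<!-- Fires when the job-complete signal is thrown -->
<intermediateCatchEvent id="jobCompleteCatch">
  <signalEventDefinition signalRef="jobCompleteSignal"/>
</intermediateCatchEvent>

<!-- Fires if the signal has not arrived within the (assumed) 30 seconds -->
<intermediateCatchEvent id="pollTimer">
  <timerEventDefinition>
    <timeDuration>PT30S</timeDuration>
  </timerEventDefinition>
</intermediateCatchEvent>
```

Whichever event fires first wins and the other subscription is cancelled, which is the standard behaviour of an event-based gateway. The timer branch is the part that is persisted as a timer job in the database.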

I have the following concerns:

1. If my Spring application dies (killing the process instance and the process engine) partway through the process execution above, and I then restart the application, will the timer above be picked up again (for example by org.activiti.engine.impl.asyncexecutor.AcquireTimerJobsRunnable)?

If yes, how can I prevent timer tasks/events from being picked up again once the current process instance dies (neither by another process engine sharing the same Activiti database nor by the same process engine when it restarts)?

2. If there is a better solution to my requirement, please let me know.

I appreciate it.


03-22-2018 09:37 AM

If you're running multiple instances of the engine you can configure them so that only one instance runs the jobExecutor. And you could configure it not to retry.
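As an illustration of the first suggestion, here is a minimal Spring bean sketch for an engine node that should not execute timers or async jobs. It assumes `dataSource` and `transactionManager` beans are defined elsewhere and that the async executor is in use; with the older job executor of Activiti 5.x the corresponding property is `jobExecutorActivate`.

```xml
<!-- Engine node that shares the database but must not run jobs -->
<bean id="processEngineConfiguration"
      class="org.activiti.spring.SpringProcessEngineConfiguration">
  <!-- Assumed to be defined elsewhere in the application context -->
  <property name="dataSource" ref="dataSource"/>
  <property name="transactionManager" ref="transactionManager"/>
  <!-- false: this engine does not start its async executor,
       so it never acquires timer or async jobs -->
  <property name="asyncExecutorActivate" value="false"/>
</bean>
```

The one node that is meant to run jobs would keep the same configuration with the property set to `true`.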

If your aim is to put a time-limit on job 76 then some kind of timer event seems like the way to go.

I'm guessing your job 76 is doing something long-running and intensive and maybe you're worried about it consuming memory and crashing the process that the engine runs in? Then presumably you don't want a retry because that would result in another crash?

If only one instance is running the jobExecutor and the long-running job crashes that instance then I would not expect any other instance to retry it. When the instance that is running the jobExecutor is restarted I am not sure whether it will retry or not. It might depend upon whether the service task is async. You could create a POC with a service task that does a System.exit to end the java process and then try restarting it and see whether it happens again.


03-22-2018 09:42 AM

If crashing the process is the concern then you could also think about calling out to whatever implements the dangerous logic in a separate java process and communicating to it via messaging. Then if it failed it would not crash the java process that the engine is running in. (I'm guessing you've already thought about whether Activiti Cloud's idea of service tasks as distinct microservices would be relevant. You could implement the messaging yourself if you want to do that but not use Activiti Cloud.)


03-26-2018 10:37 PM

Sorry for the delayed reply. I was trying some experiments.

> If you're running multiple instances of the engine you can configure them so that only one instance runs the jobExecutor. And you could configure it not to retry.

But there are other timer tasks that I want to be picked up by another process engine (with the jobExecutor enabled) if the current process engine crashes or dies. This is a crash-resiliency scenario, and the crash would not be caused by a long-running task: we use a microservices architecture, and some long-running tasks are carried out in the cloud while others are not. Is there a way to set the number of tries for a particular timer event (using extension elements, perhaps)?
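On the question of per-element retries: the Activiti user guide documents an `activiti:failedJobRetryTimeCycle` extension element for async tasks. A minimal sketch follows; the task id and the delegate class `com.example.Job76Delegate` are hypothetical. Whether the element is honoured on a timer intermediate catch event in a given Activiti version is an assumption that would need testing.

```xml
<serviceTask id="job76" name="Job 76"
             activiti:async="true"
             activiti:class="com.example.Job76Delegate">
  <extensionElements>
    <!-- ISO 8601 repeating interval, the example value from the user guide:
         5 retries with a 7 minute delay between them -->
    <activiti:failedJobRetryTimeCycle>R5/PT7M</activiti:failedJobRetryTimeCycle>
  </extensionElements>
</serviceTask>
```

As far as I understand, this setting governs retries after a failure the engine can catch, such as an exception thrown by the delegate. A hard crash of the JVM may be handled differently, because the job simply stays locked until its lock expires, so that case is worth checking in a proof of concept.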

> If your aim is to put a time-limit on job 76 then some kind of timer event seems like the way to go.

I am sorry; I did not state the actual requirement. I want to track the status, or preferably the progress, of Job 76 (or any given service task) until the process instance finishes or crashes.

> You could create a POC with a service task that does a System.exit to end the java process and then try restarting it and see whether it happens again.

I read the documentation. It says that service tasks (and other tasks in general) are executed sequentially, because exclusive is set to true for jobs by default. I will try running parallel tasks by setting "async=true" and "exclusive=false". Please let me know whether I need to change either of the following:

1. Do I have to modify the async executor configuration (to run 2-4 parallel tasks at a time)?

2. Do I also have to set async=true and exclusive=false on the intermediate catch event?
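For reference, the attributes mentioned above would sit on the service tasks roughly as in this sketch, which assumes the `activiti` prefix is bound to `http://activiti.org/bpmn`. The ids and delegate class names are hypothetical. The sketch shows only where the attributes go; it does not settle whether the executor pool needs resizing or whether the catch event needs the same flags.

```xml
<!-- Fork into the real job and a progress-reporting branch -->
<parallelGateway id="fork"/>
<sequenceFlow id="toJob76" sourceRef="fork" targetRef="job76"/>
<sequenceFlow id="toReporter" sourceRef="fork" targetRef="progressReporter"/>

<!-- async=true: each task runs as a job on the async executor.
     exclusive=false: jobs of the same process instance may run concurrently. -->
<serviceTask id="job76" name="Job 76"
             activiti:async="true"
             activiti:exclusive="false"
             activiti:class="com.example.Job76Delegate"/>

<serviceTask id="progressReporter" name="Report progress of Job 76"
             activiti:async="true"
             activiti:exclusive="false"
             activiti:class="com.example.ProgressReporterDelegate"/>
```

With non-exclusive jobs, two branches of the same instance can commit at the same time, so an ActivitiOptimisticLockingException at the join becomes possible and the engine may retry one of the jobs.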

Thank you for all your help.


03-27-2018 11:52 AM

It is now sounding to me like the monitoring you're trying to do is better done at the service task implementation level than at the Activiti level. If you search for Activiti and monitoring you find plenty of stuff, such as the Esper example in Chapter 14 of Activiti in Action. You can use the Activiti history to find out what happened to a process. But it sounds to me like you want to be able to track in detail particular instances and what they were doing when they crashed. I'm intrigued that you're using a microservices architecture as it sounds like a possible use-case for adding traceIds and sending logs to a central point (e.g. ELK) so that you can follow the logging journey across service boundaries.

The risk with running the jobExecutor on multiple instances is you might find that jobs get executed simultaneously on each. Then you get a race condition on which finishes first and the one that finishes last would end up with an ActivitiOptimisticLockingException - not necessarily a problem in itself but it might be if the execution has done something non-transactional (i.e. won't be rolled back) that you didn't want to happen twice. So it depends upon what your jobs are doing. If you're doing microservices you may be able to reduce your use of async tasks by employing messaging in your service tasks.


03-27-2018 01:24 PM

Another thought might be using listeners to track whatever you need to on entering and exiting the task.
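A minimal sketch of that idea, assuming a hypothetical class `com.example.JobStatusListener` that implements `org.activiti.engine.delegate.ExecutionListener` and records the status somewhere of your choosing:

```xml
<serviceTask id="job76" name="Job 76"
             activiti:class="com.example.Job76Delegate">
  <extensionElements>
    <!-- Invoked when the execution enters the task -->
    <activiti:executionListener event="start"
                                class="com.example.JobStatusListener"/>
    <!-- Invoked when the execution leaves the task -->
    <activiti:executionListener event="end"
                                class="com.example.JobStatusListener"/>
  </extensionElements>
</serviceTask>
```

This gives entry and exit markers only. Fine-grained progress during the task would still have to be reported by the delegate itself, and an `end` listener will not fire if the JVM dies mid-task.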


03-27-2018 01:37 PM

Thanks Ryan for all the inputs. I will try all the options that you listed and come back with the results.
